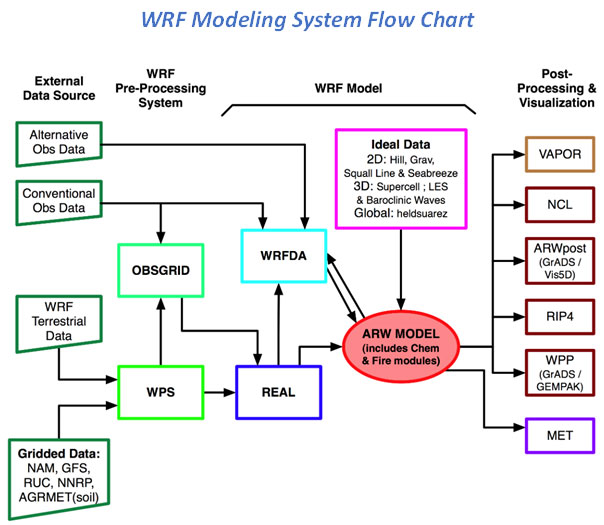

The Weather Research and Forecasting (WRF) model (https://www.mmm.ucar.edu/weather-research-and-forecasting-model) is a numerical weather prediction (NWP) system designed to serve both atmospheric research and operational forecasting needs. It supports a wide range of meteorological applications at scales from meters to thousands of kilometers, and allows researchers to produce simulations based on either real data (observations, analyses) or idealized atmospheric conditions. WRF offers operational forecasters a flexible and robust platform and is used extensively for research and real-time forecasting throughout the world.

Building WRF: A Guide

If you want to run WRF simulations, you must compile and build all of the WRF programs yourself. For details on compiling WRF, refer to the excellent article “How to Compile WRF: The Complete Process”. Based on its suggestions, I have summarized the build process below.

System Environment Test

- First and foremost, you need C and Fortran compilers, such as GNU C/Fortran or Intel C/Fortran. Check whether these are available on your HPC cluster; if they are not, ask your system administrator to install them.

- In addition to the compilers required to build the WRF executables, the WRF build system uses scripts written in bash, csh and perl as its top-level user interface. Inside these scripts are quite a few standard system commands (for example make, sed, awk and m4) that must be available regardless of which shell is used. If any of them are missing, contact your system administrator to have them installed.
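Both checks can be scripted. The sketch below, in Python (assumed to be available on the cluster), reports which tools are not on your PATH. The tool lists are assumptions for a GNU toolchain; substitute ifort and icc for Intel, and extend the command list to match your build.

```python
import shutil

# Hypothetical tool lists for a GNU toolchain; swap in ifort/icc for Intel.
COMPILERS = ["gcc", "gfortran", "cpp"]
SCRIPT_TOOLS = ["bash", "csh", "perl"]
SYSTEM_COMMANDS = ["make", "m4", "sed", "awk", "ar", "tar", "gzip"]


def missing_tools(names):
    """Return the names that cannot be found on the current PATH."""
    return [name for name in names if shutil.which(name) is None]


if __name__ == "__main__":
    missing = missing_tools(COMPILERS + SCRIPT_TOOLS + SYSTEM_COMMANDS)
    if missing:
        print("Ask your administrator to install:", ", ".join(missing))
    else:
        print("All required tools were found.")
```

An empty result only means the commands exist; it does not test that the compilers work, which is what the compatibility tests below are for.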

Building Libraries

- Depending on the type of run you wish to make, various libraries need to be installed. NetCDF, together with the compression libraries it depends on, is mandatory, and additional libraries are needed to enable some optional features of WRF. For example, an MPI library is required if you plan to build WRF for parallel runs.

- In principle, any implementation of the MPI-2 standard should work with WRF; however, finding the best combination of MPI implementation (such as OpenMPI, MPICH or MVAPICH2) and compiler, for both compilation and performance, can take a lot of time.
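Before configuring WRF, it can help to confirm that the NetCDF installation looks complete. This is a minimal sketch that assumes netcdf-c and netcdf-fortran were installed under one prefix exported in the NETCDF environment variable, as the compile tutorial does; the directory layout checked here is an assumption about a typical install, not an exhaustive test.

```python
import os
from pathlib import Path


def netcdf_problems(env=None):
    """Return problems found with the NETCDF install prefix (empty list if none).

    Assumes netcdf-c and netcdf-fortran share one prefix exported as NETCDF.
    """
    env = os.environ if env is None else env
    prefix = env.get("NETCDF")
    if not prefix:
        return ["NETCDF is not set"]
    root = Path(prefix)
    problems = []
    if not (root / "include").is_dir():
        problems.append(f"missing {root / 'include'}")
    # Some installations place libraries in lib64 instead of lib.
    if not any((root / d).is_dir() for d in ("lib", "lib64")):
        problems.append(f"no lib or lib64 directory under {root}")
    # netcdf.inc is the Fortran include file installed by netcdf-fortran.
    if not (root / "include" / "netcdf.inc").is_file():
        problems.append("netcdf.inc not found (is netcdf-fortran installed here?)")
    return problems


if __name__ == "__main__":
    issues = netcdf_problems()
    print("NETCDF looks usable" if not issues else "\n".join(issues))
```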

Library Compatibility Tests

- Once the target system can build small Fortran and C executables, and the NetCDF and MPI libraries have been built, verify that the libraries work with the compilers that will be used for the WPS and WRF builds.

- It is important to note that these libraries must all be built with the same compilers that will be used to build WRFV3 and WPS.
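One way to test this is to ask the libraries which compilers built them. NetCDF ships the helpers nc-config (C library, option --cc) and nf-config (Fortran library, option --fc) for this purpose. The sketch below compares their answers against the compilers you intend to use; the expected names gcc and gfortran are assumptions, and matching executable names do not prove matching versions, so treat a match as a first check only.

```python
import os
import shutil
import subprocess


def reported_compiler(tool, flag):
    """Ask a *-config helper (e.g. nf-config --fc) which compiler built the library.

    Returns None when the helper is not on PATH or the query fails.
    """
    if shutil.which(tool) is None:
        return None
    result = subprocess.run([tool, flag], capture_output=True, text=True)
    if result.returncode != 0:
        return None
    return result.stdout.strip() or None


def same_compiler(reported, intended):
    """Compare compiler commands by executable name, ignoring paths and flags."""
    if not reported:
        return False
    return os.path.basename(reported.split()[0]) == os.path.basename(intended)


if __name__ == "__main__":
    checks = [("nc-config", "--cc", "gcc"), ("nf-config", "--fc", "gfortran")]
    for tool, flag, intended in checks:
        found = reported_compiler(tool, flag)
        status = "OK" if same_compiler(found, intended) else "MISMATCH"
        print(f"{tool} {flag}: {found!r} (expected {intended}) {status}")
```

If the libraries were built with MPI compiler wrappers, the helpers may report mpicc or mpif90 instead, in which case compare against the wrapper names.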

Building WRFV3

- After confirming that all libraries are compatible with the compilers, you can prepare to build WRFV3. Download the WRF tar file, unpack it in your build directory, and run the ‘configure’ script to create the configuration file configure.wrf.

- Once configuration is complete, you may review or even revise configure.wrf before compiling WRF for the type of case you wish to run (for example, em_real for real-data cases).

- Compilation should take about 20-30 minutes. Once it completes, check that the four executables were built successfully in the main/ directory; for an em_real build these are wrf.exe, real.exe, ndown.exe and tc.exe.

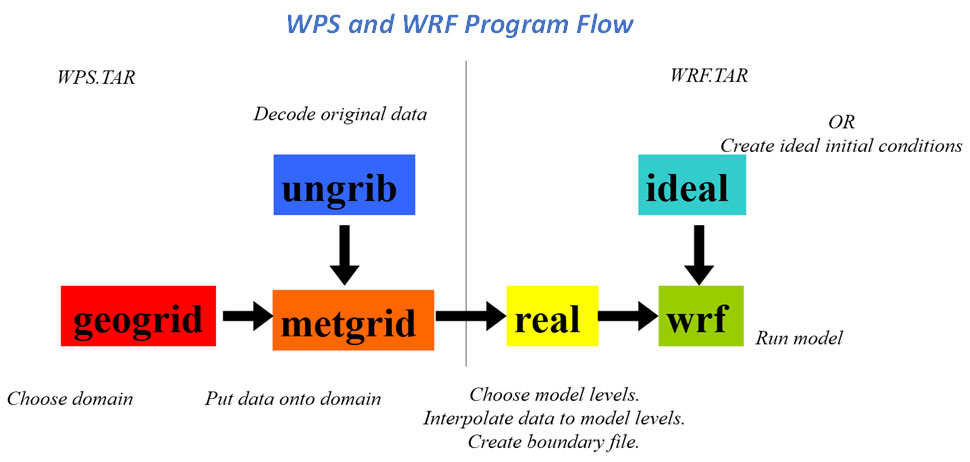
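The executable check can be automated. This sketch assumes it is run from the directory that contains the unpacked WRFV3 source tree (the path WRFV3/main is an assumption) and treats missing or zero-length files as a failed build.

```python
from pathlib import Path

# Executables expected in main/ after an em_real build of WRF 3.x.
WRF_EXECUTABLES = ["wrf.exe", "real.exe", "ndown.exe", "tc.exe"]


def missing_executables(directory, names):
    """Return the expected executables that are absent or empty in *directory*."""
    missing = []
    for name in names:
        path = Path(directory) / name
        # exists() follows symlinks, so a dangling link also counts as missing.
        if not path.exists() or path.stat().st_size == 0:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_executables("WRFV3/main", WRF_EXECUTABLES)
    print("Build OK" if not missing else f"Missing: {', '.join(missing)}")
```

If anything is missing, search the compile log for the first "Error" message rather than the last one, since later errors usually follow from the first.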

Building WPS

- After the WRF model is built, the next step is building the WPS programs and tools. WRF MUST be built successfully before you try to build WPS, because the WPS build uses the I/O libraries from the WRF build.

- Run the WPS ‘configure’ script to create the configuration file configure.wps, revise it with appropriate settings for the related libraries, and then compile WPS.

- If the compilation is successful, there should be three main executables in the WPS top-level directory: geogrid.exe, ungrib.exe and metgrid.exe.
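A similar check works for WPS. In the top-level WPS directory the three programs are normally symbolic links into the geogrid/, ungrib/ and metgrid/ subdirectories, so the sketch below also treats a dangling link as a failure. The directory name WPS is an assumption.

```python
import os

# The three main WPS programs; in the top-level WPS directory they are
# normally symbolic links into geogrid/, ungrib/ and metgrid/.
WPS_EXECUTABLES = ["geogrid.exe", "ungrib.exe", "metgrid.exe"]


def broken_wps_links(wps_dir):
    """Return WPS executables that are missing or whose links are dangling."""
    return [
        name
        for name in WPS_EXECUTABLES
        # os.path.exists() is False for missing files and for dangling symlinks.
        if not os.path.exists(os.path.join(wps_dir, name))
    ]


if __name__ == "__main__":
    problems = broken_wps_links("WPS")
    print("WPS build OK" if not problems else f"Check the compile log for: {problems}")
```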

Static Geography Data

- The WRF modeling system can create both idealized and real-data simulations. To run a real-data case, you first need to prepare several important input datasets, such as the static geographical data (geog) used by geogrid.

- Keep in mind that the complete dataset is very large. If you share space on a cluster, consider placing it in a central location that everyone can use, so that each user does not have to download a separate copy.

Real-time Data

- For real-data cases, the WRF model requires up-to-date meteorological data for both the initial conditions and the lateral boundary conditions. You have to download these data yourself, and you will probably end up writing a short script that downloads and preprocesses them automatically to shorten the initialization time.
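As a starting point for such a script, the sketch below lists the forecast files a run needs: one file at the initial time plus one per boundary-update interval. It assumes NCEP GFS 0.25-degree GRIB2 output, whose files are named like gfs.t00z.pgrb2.0p25.f003. The base URL and the gfs.YYYYMMDD/HH/atmos/ directory layout are assumptions (NOMADS layouts change over time and only recent cycles are kept online), so verify the current path before downloading.

```python
from datetime import datetime

# Placeholder base URL (assumption): verify the current NOMADS directory layout.
BASE_URL = "https://nomads.ncep.noaa.gov/pub/data/nccf/com/gfs/prod"


def gfs_file_urls(cycle, run_hours, interval_hours=3):
    """List GFS 0.25-degree GRIB2 files covering the initial and boundary times."""
    if run_hours % interval_hours:
        raise ValueError("run_hours must be a multiple of interval_hours")
    day = cycle.strftime("%Y%m%d")
    hh = cycle.strftime("%H")
    urls = []
    for fhr in range(0, run_hours + 1, interval_hours):
        name = f"gfs.t{hh}z.pgrb2.0p25.f{fhr:03d}"
        urls.append(f"{BASE_URL}/gfs.{day}/{hh}/atmos/{name}")
    return urls


if __name__ == "__main__":
    # Illustrative cycle only; pick a recent one that is still online.
    for url in gfs_file_urls(datetime(2024, 1, 1, 0), run_hours=24, interval_hours=6):
        print(url)
```

The download itself (for example with wget or urllib) and the call to link_grib.csh can then loop over this list.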

Run WPS and WRFV3

- You should be familiar with the basic steps for running WPS and WRFV3. For more detailed information, refer to the WRF-ARW Online Tutorial.

- First, run the WPS programs (geogrid.exe, ungrib.exe and metgrid.exe) to generate the input files for WRF. Then run ‘real.exe’ and ‘wrf.exe’ in sequence to simulate your case.

- On most HPC cluster systems, you can’t run your simulation interactively on the compute nodes. Instead, you prepare job scripts and submit them in batch mode. These job scripts must comply with the requirements of the resource manager and job scheduler installed on the cluster, and follow the policies set by its administrators.
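As an illustration, the sketch below generates a simple batch script that runs real.exe and then wrf.exe with mpirun. It assumes a Torque/PBS scheduler and Torque's nodes=...:ppn=... resource syntax; PBS Pro, Slurm and individual site policies use different directives, and the job name, node counts and walltime are hypothetical.

```python
def pbs_job_script(job_name, executables, nodes, cores_per_node, walltime="12:00:00"):
    """Return a Torque/PBS-style batch script that runs each executable in order.

    The #PBS directives are assumptions; check the options (queue, account,
    resource syntax) that your site's scheduler and policies require.
    """
    ntasks = nodes * cores_per_node
    lines = [
        "#!/bin/bash",
        f"#PBS -N {job_name}",
        f"#PBS -l nodes={nodes}:ppn={cores_per_node}",
        f"#PBS -l walltime={walltime}",
        "#PBS -j oe",
        "set -e  # stop at the first program that fails",
        'cd "$PBS_O_WORKDIR"  # jobs start in $HOME unless told otherwise',
    ]
    for exe in executables:
        lines.append(f"mpirun -np {ntasks} ./{exe}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical case: real.exe then wrf.exe on 2 nodes x 20 cores.
    print(pbs_job_script("irma", ["real.exe", "wrf.exe"], nodes=2, cores_per_node=20))
```

Save the printed script to a file and submit it with qsub. Running both programs in one job keeps the example short; separate job scripts for real.exe and wrf.exe work equally well.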

An Easier Alternative?

As you can see, it can be a lengthy, complex process to build and run WRF simulations on your own. Is there any way to shorten this process for WRF end users?

For novices, or researchers unfamiliar with HPC environments, much of the difficulty lies in compiling WRF from its source code through a series of tedious, time-consuming steps to make it ready to run on a specific HPC cluster platform. These steps include setting up the computing environment correctly, testing the components and their compatibility with each other, installing WRFV3 and WPS, and finally preparing to run WPS and then WRFV3.

Upon investigation, we found that many users spent most of their time compiling WRF and preparing job scripts to run their simulations. We therefore built WRF and all required libraries following our best practices for optimizing performance and simplifying operations, and now provide pre-compiled, pre-optimized WRF modules for users to run their simulations on the QxSmart HPC/DL Solution, QCT’s in-house HPC system.

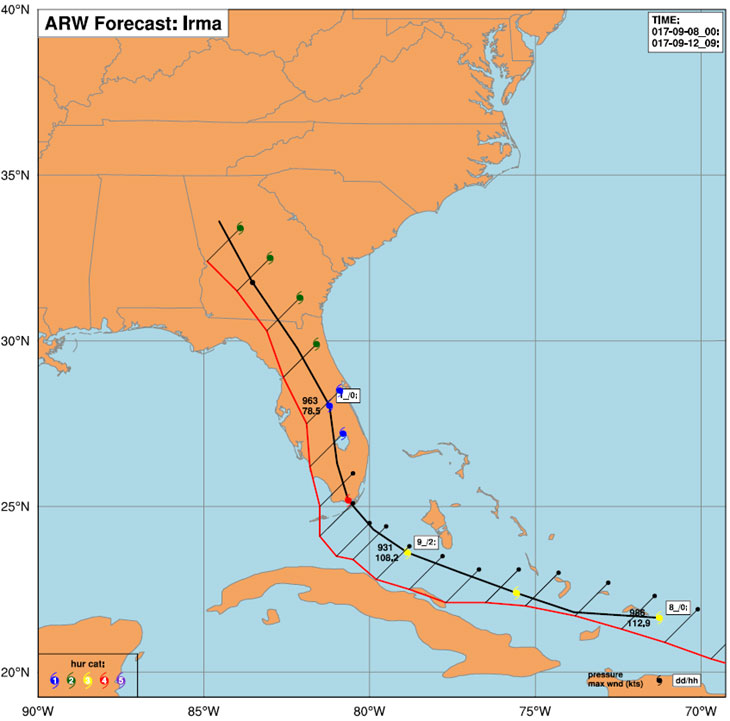

Test Simulation on QCT HPC System: Hurricane Irma

Hurricane Irma (https://en.wikipedia.org/wiki/Hurricane_Irma) was an extremely powerful and catastrophic hurricane, and the most intense Atlantic hurricane to strike the United States since Hurricane Katrina (https://en.wikipedia.org/wiki/Hurricane_Katrina) in 2005.

In this case study, we ran WRF 3.9.1.1 on the QCT HPC system to simulate the period 2017-09-08_00 to 2017-09-13_00, and used the scientific data analysis and visualization tool NCL (https://www.ncl.ucar.edu/index.shtml) to generate high-quality graphics for research. We also plotted the observed and simulated tracks for comparison.
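The simulation period above has to be entered consistently in namelist.wps (start_date and end_date in &share) and namelist.input (run_days/run_hours and the start/end fields in &time_control). The sketch below derives those values from the two dates. The 6-hourly interval_seconds is an assumption, not necessarily the setting used in this case study, and the other &share entries (such as max_dom) are omitted.

```python
from datetime import datetime

WRF_FMT = "%Y-%m-%d_%H:%M:%S"


def period_settings(start, end, interval_hours=6):
    """Derive namelist values for a WRF run from its start and end times."""
    if end <= start:
        raise ValueError("end must be after start")
    total_hours, rem = divmod(int((end - start).total_seconds()), 3600)
    if rem or total_hours % interval_hours:
        raise ValueError("period must be a whole number of intervals")
    return {
        "start_date": start.strftime(WRF_FMT),
        "end_date": end.strftime(WRF_FMT),
        "run_days": total_hours // 24,
        "run_hours": total_hours % 24,
        "interval_seconds": interval_hours * 3600,
        # Initial time plus one time per boundary update.
        "n_input_times": total_hours // interval_hours + 1,
    }


if __name__ == "__main__":
    s = period_settings(datetime(2017, 9, 8, 0), datetime(2017, 9, 13, 0))
    print("&share")
    print(f" start_date = '{s['start_date']}',")
    print(f" end_date   = '{s['end_date']}',")
    print(f" interval_seconds = {s['interval_seconds']},")
    print("/")
```

For this period the run is 5 days long; WRF accepts either run_days = 5, run_hours = 0 or run_days = 0, run_hours = 120.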

- [Step 1] Load the pre-compiled, optimized modules for running WRF

- module purge

- module load dot

- module load wrf/3.9.1/intelmpi-2018/intel-18.0/wps_netcdf4

- module load wrf/3.9.1/intelmpi-2018/intel-18.0_nochem_vortex

- [Step 2a] Prepare the working directory and model input data (Irma and SST)

- Create a dedicated directory as the working space for this case

- Get the input data for running WPS and put it in the working directory

- Modify the configuration file namelist.wps, then run the link_grib.csh script and ungrib.exe to extract the GRIB input data into intermediate-format files

- Run geogrid.exe to define the simulation domains, and metgrid.exe to horizontally interpolate the intermediate-format meteorological data onto those domains

- [Step 3] Run WRF to start the case simulation

- Review or modify the settings in the configuration file namelist.input for the WRF run

- Submit the auto-generated job scripts runreal.job and runwrf.job with the batch command qsub to start the model simulation

- [Step 4] Use pre-installed tools such as NCL to generate plots for analyzing the results.
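For the track comparison, a common measure is the great-circle distance between the observed and simulated storm centers at matching times. The sketch below computes it with the haversine formula and a mean Earth radius of 6371 km. It is written in Python rather than NCL and is not necessarily how the plots here were produced; the positions in the usage example are made-up placeholders, not Irma data.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to guard against rounding slightly above 1 for near-antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def track_errors(observed, simulated):
    """Per-time track error for dicts mapping a time label to (lat, lon)."""
    common = sorted(set(observed) & set(simulated))
    return {t: great_circle_km(*observed[t], *simulated[t]) for t in common}


if __name__ == "__main__":
    # Placeholder positions for illustration only (not real Irma data).
    obs = {"t0": (20.0, -70.0), "t6": (21.0, -72.0)}
    sim = {"t0": (20.0, -70.5), "t6": (21.5, -72.5)}
    for t, err in track_errors(obs, sim).items():
        print(f"{t}: {err:.1f} km")
```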


The pre-compiled and pre-optimized WRF modules we created take care of the groundwork that precedes running the simulation, removing much of the time and hassle involved in preparing the source code and job scripts. Furthermore, we optimized these modules for our own HPC infrastructure, ensuring that everything runs compatibly within the HPC environment. QCT’s HPC system lets WRF end users skip the legwork and get straight to running simulations; in a world where climate patterns are becoming more volatile, running WRF and getting forecasts as close to real time as possible is more critical than ever.

Learn more about the QxSmart HPC/DL Solution to see in detail all the ways QCT’s optimized HPC infrastructure can help end users, developers and administrators accelerate scientific computing.