QCT’s upcoming QuantaGrid, QuantaPlex, and QuantaEdge servers will support the new NVIDIA L4 Tensor Core GPUs to meet the growing demand for accelerated computing, which can make people’s lives more convenient and their experiences more immersive.

The NVIDIA L4 Tensor Core GPU, based on the Ada Lovelace architecture, was introduced this week at NVIDIA GTC. The L4 GPU is the successor to the NVIDIA T4 GPU, which was introduced almost four years ago with 16GB of GDDR6 memory and a 70W maximum power limit to accelerate a wide range of cloud workloads, including high-performance computing, deep learning training and inferencing, machine learning, data analytics, and graphics. The L4 follows it with fourth-generation Tensor Cores, third-generation Ray Tracing (RT) Cores, shader execution reordering (SER), AV1 encoders, Deep Learning Super Sampling (DLSS 3) technology, and 24GB of GDDR6 memory in a 72W power envelope to tackle a broad range of artificial intelligence (AI) applications.

NVIDIA L4 Tensor Core GPU

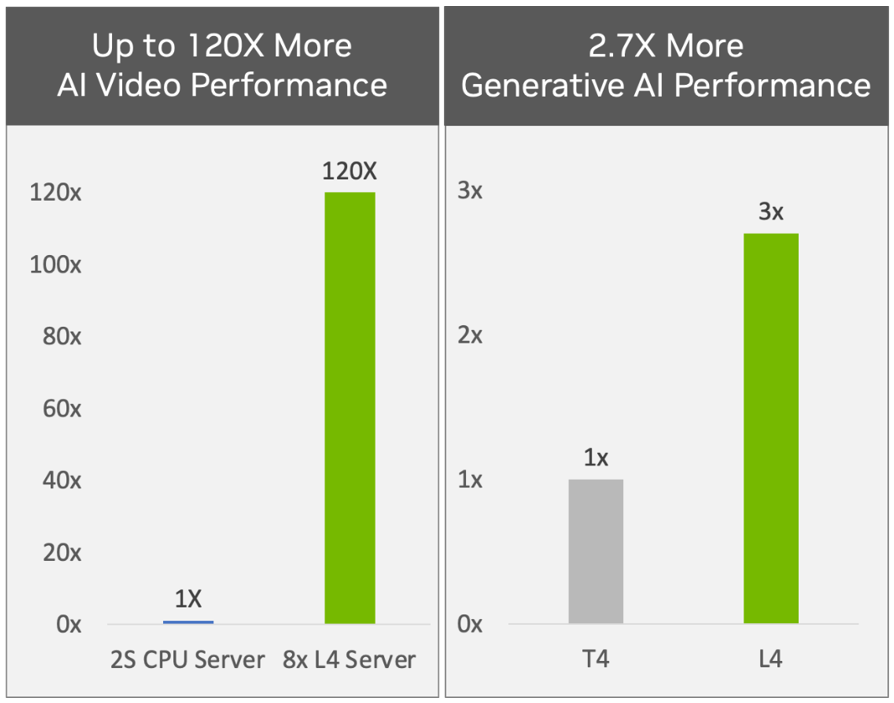

The NVIDIA L4 Tensor Core GPU is based on the Ada Lovelace architecture and built on a 4nm manufacturing process. According to NVIDIA, servers equipped with the L4 GPU deliver up to 120x higher AI video performance than CPU-based solutions, while providing 2.7x more generative AI performance (see Figure 1) and over 4x more graphics performance than the previous generation. The versatile L4 GPU comes in an energy-efficient, single-slot, low-profile PCIe form factor that makes it well suited to edge, cloud, and enterprise deployments.

Figure 1. NVIDIA L4 GPU boosts video and AI performance over T4

With growing demand for more personalized services, a variety of AI-powered applications and products are emerging. QCT is leveraging this technology for AI inference to recognize images, understand speech, and make recommendations for healthcare and 5G applications in the cloud and at the edge. With the NVIDIA L4 GPU, QCT systems can improve visual computing, graphics, virtualization, and other user experiences by delivering more generative AI performance than the previous generation. As a universal, energy-efficient workload accelerator, the NVIDIA L4 GPU can boost performance on all types of AI models and cover more use cases than before, which CPUs alone cannot do.

NVIDIA OVX Systems

QCT is also supporting the third generation of NVIDIA OVX systems, which builds upon the capabilities of previous generations with next-generation technology and a new, balanced architecture design featuring NVIDIA L40 GPUs, also powered by the Ada Lovelace architecture, and the NVIDIA BlueField-3 DPU. NVIDIA OVX 3.0 is optimized for performance and scale to accelerate the next generation of digital twins and hyperscale Omniverse workloads.

QCT has previously built immersive digital twin simulations on its systems with NVIDIA Omniverse Enterprise (a scalable, end-to-end platform enabling enterprises to build and operate metaverse applications) to help its customers stay ahead in their digital transformation journey. QCT created a photorealistic digital twin of one of its own factory production lines, leveraging NVIDIA Omniverse to simulate and test workflows and other logistics for efficiency before actual implementation, delivering time and cost savings while improving flexibility and precision.

Follow QCT on Facebook and Twitter to receive the latest news and announcements.