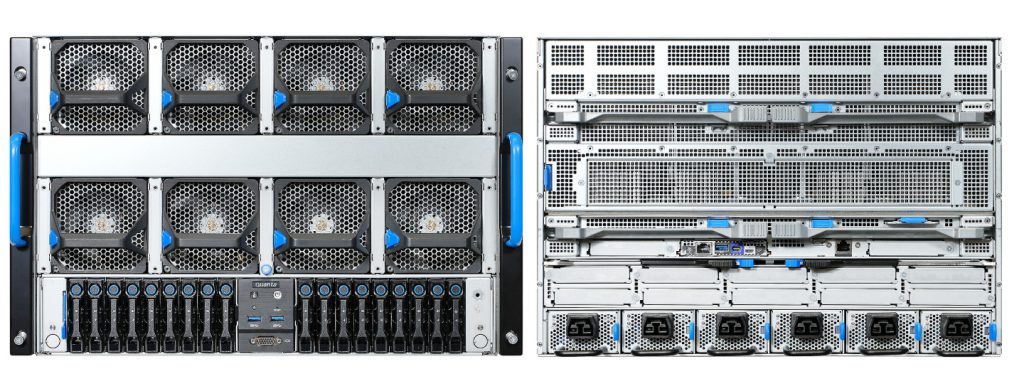

In the ever-evolving landscape of artificial intelligence and high-performance computing (HPC), QCT continues to lead the charge with cutting-edge infrastructure solutions. At the heart of this innovation lies the QCT QuantaGrid D74H-7U, a powerhouse server purpose-built to tackle the most demanding AI workloads—from large language models (LLMs) to generative AI and scientific computing.

The QuantaGrid D74H-7U is engineered for extreme AI-HPC workloads. It features:

- 8x NVIDIA HGX H200 GPUs in SXM5, each with 141GB of HBM3e memory

- 32 DDR5 DIMM slots

- Support for non-blocking NVIDIA Magnum IO GPUDirect RDMA and GPUDirect® Storage

- 18x All-NVMe drive bays for high-speed data access

- 10x OCP NIC 3.0 slots for ultra-fast networking, supporting NVIDIA ConnectX SuperNICs
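As a rough illustration of what the GPU figures above add up to, the sketch below computes the aggregate HBM3e capacity of one chassis and compares it with the memory needed for model weights alone. The bytes-per-parameter table and the helper names are assumptions made for this example. The estimate ignores optimizer state, activations, and KV cache, so real training footprints are considerably larger.

```python
# Figures taken from the spec list above.
GPUS_PER_NODE = 8
HBM_PER_GPU_GB = 141  # HBM3e per H200 GPU, in decimal gigabytes

# Assumption for illustration: storage cost per parameter for common formats.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def node_hbm_gb(gpus: int = GPUS_PER_NODE, per_gpu_gb: int = HBM_PER_GPU_GB) -> int:
    """Total HBM across all GPUs in one chassis, in GB."""
    return gpus * per_gpu_gb


def weights_gb(params_billion: float, dtype: str) -> float:
    """Memory for the weights only: 1e9 params * bytes per param = GB."""
    return params_billion * BYTES_PER_PARAM[dtype]


def weights_fit(params_billion: float, dtype: str) -> bool:
    """True if the raw weights fit in one chassis' combined HBM."""
    return weights_gb(params_billion, dtype) <= node_hbm_gb()


if __name__ == "__main__":
    print("HBM per chassis (GB):", node_hbm_gb())
    for size, dtype in [(70, "bf16"), (1000, "bf16"), (1000, "fp8")]:
        print(f"{size}B params in {dtype}: {weights_gb(size, dtype)} GB,",
              "fits" if weights_fit(size, dtype) else "does not fit")
```

Under these assumptions, a 70B-parameter model in bf16 needs about 140 GB for weights, which is roughly the capacity of a single H200, while a trillion-parameter model in bf16 exceeds the HBM of one chassis and would need to be sharded across several systems.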

The architecture follows a tool-less, modular philosophy. Major components of this 7U system can be removed and replaced without unmounting the chassis from the rack, which improves serviceability and increases system uptime. The same design provides forward support for next-generation CPUs and GPUs and simplifies maintenance and upgrades.

The MLPerf Training benchmark suite measures how fast systems can train models to a target quality metric. In the latest MLPerf Training round (v5.1), the QCT QuantaGrid D74H-7U delivered strong results across several key benchmarks, including:

- DLRM for deep learning recommendation models

- Llama 2 70B for LLM fine-tuning

- R-GAT for graph neural networks (GNN)

- RetinaNet for object detection

These results underscore the system’s ability to handle massive datasets and complex model architectures efficiently. The combination of NVIDIA H200 GPUs and QCT’s optimized hardware design targets low latency and high throughput, making the system well suited to enterprise-scale AI training.
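To make the "time to a target quality metric" idea concrete, here is a minimal toy sketch of how such a measurement can be structured. It is not the MLPerf reference harness and does not reproduce its rules. The function name `time_to_target`, its parameters, and the convention that a higher metric is better are all assumptions made for this example.

```python
import time
from typing import Callable, Optional


def time_to_target(
    train_step: Callable[[], None],
    evaluate: Callable[[], float],
    target: float,
    eval_every: int = 10,
    max_steps: int = 10_000,
    clock: Callable[[], float] = time.perf_counter,
) -> Optional[float]:
    """Return elapsed time until evaluate() >= target, or None if never reached.

    Assumes a higher metric is better; for a loss-style metric the
    comparison would be flipped.
    """
    start = clock()
    for step in range(1, max_steps + 1):
        train_step()
        # Evaluate only periodically, since evaluation itself costs time.
        if step % eval_every == 0 and evaluate() >= target:
            return clock() - start
    return None


if __name__ == "__main__":
    # Hypothetical stand-in for a model: quality rises by 1 per step.
    state = {"quality": 0}

    def fake_step() -> None:
        state["quality"] += 1

    elapsed = time_to_target(fake_step, lambda: state["quality"], target=50)
    print("reached target:", elapsed is not None)
```

The injectable `clock` argument is a design choice for this sketch: it lets the timing logic be checked deterministically with a fake clock instead of wall time.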

The NVIDIA H200 GPUs bring a significant leap in memory capacity and bandwidth, with 141GB of HBM3e per GPU, enabling real-time inference and training of trillion-parameter models. With NVIDIA NVLink interconnects and HBM3e memory, these GPUs deliver the performance needed for next-generation AI applications.

The QCT QuantaGrid D74H-7U leverages this power to support:

- Generative AI workloads

- Large-scale LLM training

- Scientific simulations

- Enterprise AI deployments

QCT’s participation in MLPerf benchmarks reflects its commitment to transparency and performance leadership. By openly sharing results, QCT empowers customers to make informed infrastructure decisions backed by standardized metrics.

As AI continues to evolve, QCT remains at the forefront, ready to support the next wave of innovation with systems like the D74H-7U. Whether you’re building a scalable AI data center or deploying high-performance computing clusters, QCT’s MLPerf-validated platforms offer the reliability and power needed to stay ahead in the AI race. The full MLPerf Training v5.1 results are published by MLCommons.